What Do Production ADAS and ADS Systems Tell Us About the Migration to Collaborative Driving

- FISITA

- Apr 14, 2020

Updated: Feb 21, 2021

The FISITA Automotive HMI Online Conference was moderated by Christophe Aufrère, CTO of Faurecia, with speakers Bryan Reimer from the MIT Center for Transportation and Logistics, Philipp Kemmler-Erdmannsdorffer from NIO, and Omar Ben Abdelaziz from Faurecia.

The following transcript is taken from the presentation by Bryan Reimer of MIT, “What Do Production ADAS and ADS Systems Tell Us About the Migration to Collaborative Driving?”

Christophe: Now let me introduce the first speaker, Bryan Reimer, a brilliant research scientist at the MIT Center for Transportation and Logistics, which aims to find solutions to the next generation of human factors challenges. He also founded and leads the Advanced Vehicle Technology Consortium, and he has received several innovation awards. So now the floor is Bryan’s. Thank you, Bryan, and good luck with your presentation.

Bryan: Thank you for the introduction, and I hope that everybody is in good health and safe in these turbulent times. I’d like to talk a little today about what production advanced driver assistance systems and automated driving systems are telling us about our migration forward on the path of automation, toward what I would consider collaborative driving rather than autonomous driving.

Most importantly, I think we need to look back for a moment. Our pursuit of driverless technologies is not new. As far back as 1926, the Milwaukee Sentinel in the U.S. documented the first move towards driverless vehicles, in the context of remote control. In the 1950s, GM invested heavily in this topic. In the 1980s there were several prototypes. The modern era of driverless and automated technologies was really kicked off by the DARPA challenges in the 2000s and the introduction of the Google self-driving car program just over ten years ago. In the ten years since, we’ve invested countless billions of dollars in the pursuit of this technological marvel. What’s next? I’d argue it’s a drastic shift in how we live and move, but one that clearly will not take the driver out of the driver’s seat anywhere near as quickly as the forecasts of five or ten years ago may have presumed.

When we think about what autonomy means to different people, there are a number of different viewpoints. As I highlighted in a 2018 piece on my Forbes blog, we haven’t yet begun to agree on what we are trying to achieve here. Are we trying to achieve vehicles and vehicle systems that simply offer hands-free driving, like many of the systems being produced today? Or are we really looking for robotaxis: autonomous, on-demand mobility services, with or without safety drivers, delivering packages or moving people around a neighbourhood or city? A mobility ecosystem that provides seamless intermodal connectivity, moving people from home to the subway or to far more efficient steel-on-steel rail? Or, most importantly as I would think of it, how do we begin to target a safety threshold that moves us towards Vision Zero? Because living and moving safely is perhaps the ultimate target we should be looking to achieve. Now, obviously there are different elements of the mobility ecosystem pursuing each of these goals. These are big, complex problems in and of themselves. How do we decide where we are trying to achieve success, so that we can find a successful endgame in the quest for mobility?

The hard part here is that we as drivers, humans sitting behind the steering wheel, have a really difficult time understanding the human-machine characteristics of what our new role may be. As humans we’re really attuned to one question: are we driving, or are we riding? Am I responsible for the operation of this vehicle, or am I a passenger along for the ride? Now, as we automate more and move forward in our pursuit of collaborative driving, we are going to need to define some middle points where driving doesn’t necessarily mean driving in the traditional way. We need to educate the populace well beyond SAE J3016 so that people understand what their role is at any given point in time, and it is very much the human-machine interface that is critical to providing that understanding. In a moment I will discuss what the data tell us about why that understanding is difficult today. It needs to be iterated forward over time if we are going to successfully deploy all the technologies we are working on. So how do we move people’s understanding forward, from traditional driving to modern driving, and to how riding may very well be different?

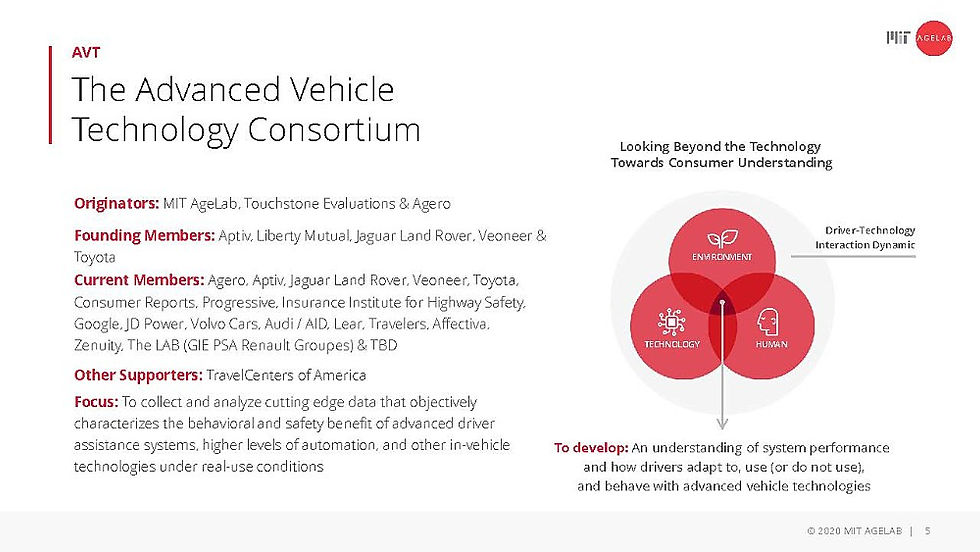

So, about five years ago, I led the development of the Advanced Vehicle Technology Consortium to focus on collecting and analyzing data on how people are using advanced driver assistance systems and higher levels of automation in production vehicles in the field. How do we understand system use, and how drivers are adapting to use the systems (or not), so that we can understand how they are behaving and iterate the designs of HMIs, and the driver-technology interaction dynamic on the roads, forward faster? In essence, how do we get the industry to collaborate more strategically and learn from data and from each other, so that we can engineer systems more effectively over time? The consortium is now supported by four OEMs, several suppliers, insurers, leading technology companies such as Google, and some of the key safety stakeholders throughout the world, including IIHS, J.D. Power and Consumer Reports, working together to try to understand the complexities of system design and development on our roads. So let’s dive into a little of the data for a moment.

Now I’m going to switch over to video, with all its complexity today. Hopefully you can watch this clip. Let’s cross over now.

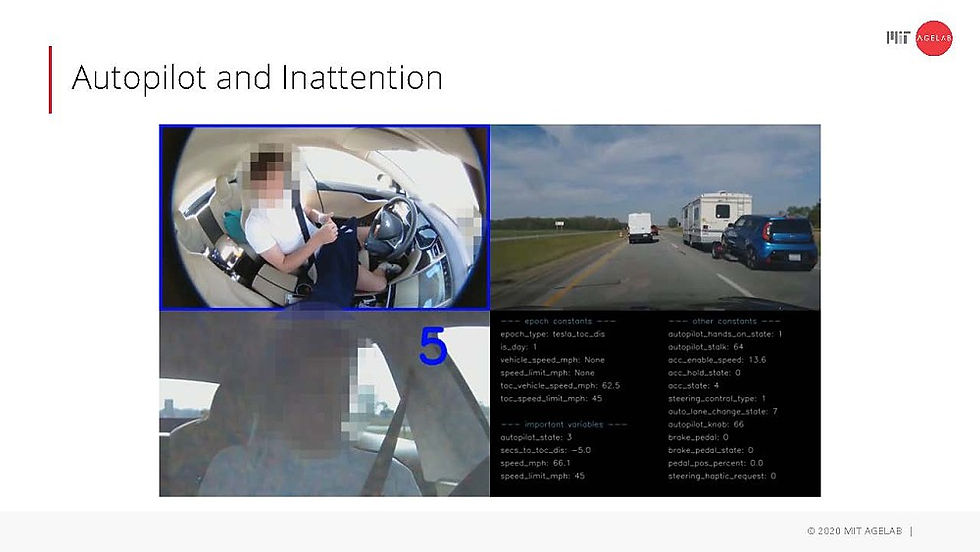

The largest supplier of production-level automated driving systems at this point is clearly Tesla, with a number of traditional OEMs quickly moving in behind. But it’s not the automation that Tesla is providing (and I’ll play that clip again) that’s the issue; it’s very much the interface to that automation. By any count, Autopilot is a flawed automation system. It’s not perfect. It cannot drive autonomously. It cannot handle the complex cut-in in the construction zone in this video. It’s not expected to, but how do we ensure that the driver of that system understands that and is ready to take over, attentive to the situation around them and not fixated on doing things in the vehicle, so that we are developing a mobility framework with enhanced safety? Unfortunately, designing appropriate technology and deploying it in the car is incredibly complex. It’s difficult to understand how people are going to use, not use, appropriately trust, and inappropriately trust technology on their own. It’s no myth that with automation comes a responsibility for enhanced education. The aviation community has known that for decades.

So when I look at other vehicles we’re studying, Cadillac’s Super Cruise for example, we see in this video clip a driver’s first experience with Super Cruise: an automation technology that may very well be no stronger than Autopilot from an automation perspective, but is far more advanced from an interface perspective. I’ll repeat that: Super Cruise, while many think of it as an automated driving system, is really a system designed around perhaps one of the strongest in-vehicle HMIs developed to date. The lead researcher on it, Chuck Green, retired from GM not so long ago and now works part-time with us. The behavioural adaptation system in Super Cruise is quite unique. In particular, the system is designed around an attention management strategy that is coupled with the automated driving system, and I think that is very important to understand: the automation’s path planning and control are literally built to work coherently with the attention management system.

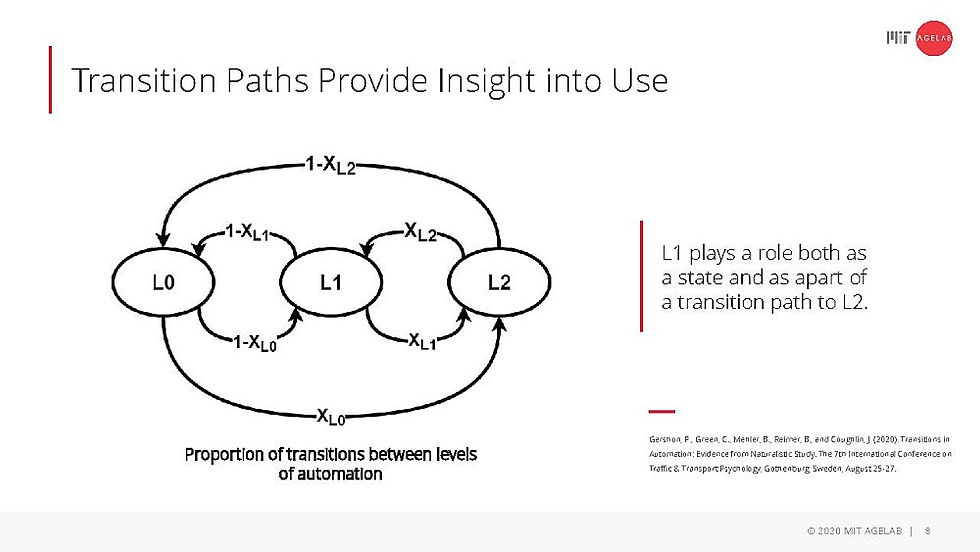

So we begin to think about a transition and pathway to appropriate use. When we look at how people move from Level 0, manual control, to Level 1 ACC (Adaptive Cruise Control) and over to Super Cruise at Level 2, we see that Level 1 plays a very important role in how people learn about the technology, how they transition to the Level 2 state, and how they transition back. We also see that interfaces, in particular if they are developed to support driver attention and to motivate drivers, can be used cohesively to include drivers in that collaborative relationship and promote appropriate use of the technology. I’m not saying that any development today is perfect. I’m not saying that the second or third generation of systems like Super Cruise, which many beyond GM are working on, won’t be better than the first. I’m just saying that the unique characteristics of this system as it’s designed are quite effective in promoting appropriate use of the technology. So let’s look at two specific components of this in video.

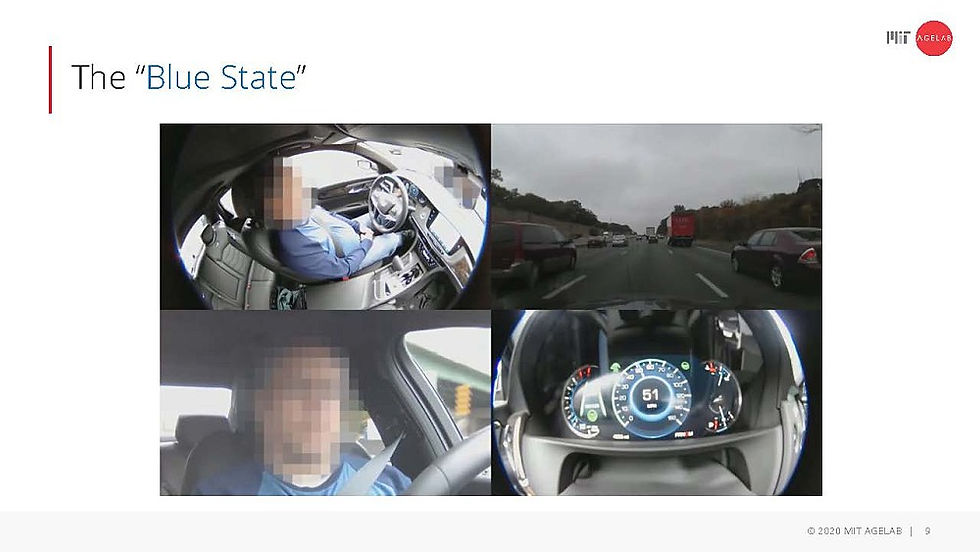

First, there is what is designated the blue state, or the manual override state. In this case, when the driver decides to change lanes, the automation is overridden, and it naturally re-engages to the green, or happy, state when it finds the new lane. Again, that results in interface changes in the instrument cluster and in the light bar on top of the steering wheel, cohesively giving the driver a role in operating the automation and motivating them to use it. On the other hand, there are also states for emergent situations, such as the red state, which uses the mental model of “red is bad” to tell drivers: hey, it’s time to take over; the automation is not supported in this operating context of a cut-in. The red warnings go up and the alarms go off (which I’m not playing here): the driver needs to be attentive. What I’m not going to cover in detail today, for lack of time, is that there is also an attention management system in here: driver monitoring coupled with a management system that encourages, and mandates, that the driver look at the road every six seconds. The carrot is use of the automation, and the stick is “I’m going to take it away and lock you out if you don’t pay attention.” We believe that carrot-and-stick approach is very strong in motivating appropriate use of the technology.
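
The display states and the carrot-and-stick attention rule described above can be summarized as a small state machine. The sketch below is a hypothetical illustration, not GM’s implementation: the names `AssistState`, `AssistController` and `MAX_EYES_OFF_ROAD_S`, the collapsed escalation (a single red alert followed by lockout, rather than a graded sequence), and treating the six-second figure quoted in the talk as a hard eyes-off-road limit are all assumptions.

```python
from enum import Enum


class AssistState(Enum):
    """Hypothetical HMI states, loosely following the colours described in the talk."""
    GREEN = "engaged"         # automation steering, driver attentive
    BLUE = "manual_override"  # driver steering; automation waits to re-engage
    RED = "take_over"         # driver must take control now
    OFF = "locked_out"        # automation withheld for the rest of the drive


# Assumption: the six-second figure from the talk, used as a hard eyes-off-road limit.
MAX_EYES_OFF_ROAD_S = 6.0


class AssistController:
    """Hypothetical toy controller: the 'carrot' is continued automation, the 'stick' is lockout."""

    def __init__(self, max_eyes_off_road_s: float = MAX_EYES_OFF_ROAD_S) -> None:
        self.max_eyes_off_road_s = max_eyes_off_road_s
        self.state = AssistState.GREEN
        self.eyes_off_road_s = 0.0
        self.locked_out = False

    def step(self, dt: float, eyes_on_road: bool,
             driver_steering: bool, road_supported: bool) -> AssistState:
        """Advance the controller by dt seconds and return the state to display."""
        if self.locked_out:
            # Stick: once locked out, the automation stays unavailable (no reset in this sketch).
            self.state = AssistState.OFF
        elif not road_supported:
            # Operating context not supported, e.g. a construction-zone cut-in.
            self.state = AssistState.RED
        elif driver_steering:
            # Driver input overrides the automation, e.g. for a lane change.
            self.state = AssistState.BLUE
            self.eyes_off_road_s = 0.0
        else:
            # Hands off on a supported road: track continuous eyes-off-road time.
            self.eyes_off_road_s = 0.0 if eyes_on_road else self.eyes_off_road_s + dt
            if self.eyes_off_road_s > self.max_eyes_off_road_s:
                self.locked_out = True
                self.state = AssistState.RED
            else:
                # Carrot: an attentive driver keeps (or regains) the automation.
                self.state = AssistState.GREEN
        return self.state
```

Stepping this once per second, a driver who keeps looking back at the road stays in GREEN, a steering input moves the display to BLUE, and seven seconds of continuous eyes-off-road time with hands off produces one RED alert followed by OFF. A production system would grade its escalation far more finely; the point here is only the coupling of the automation state to driver attention.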

So I strongly believe that a number of other OEMs have learned this lesson, that we need to motivate appropriate technology use, and are moving there in their design characteristics.

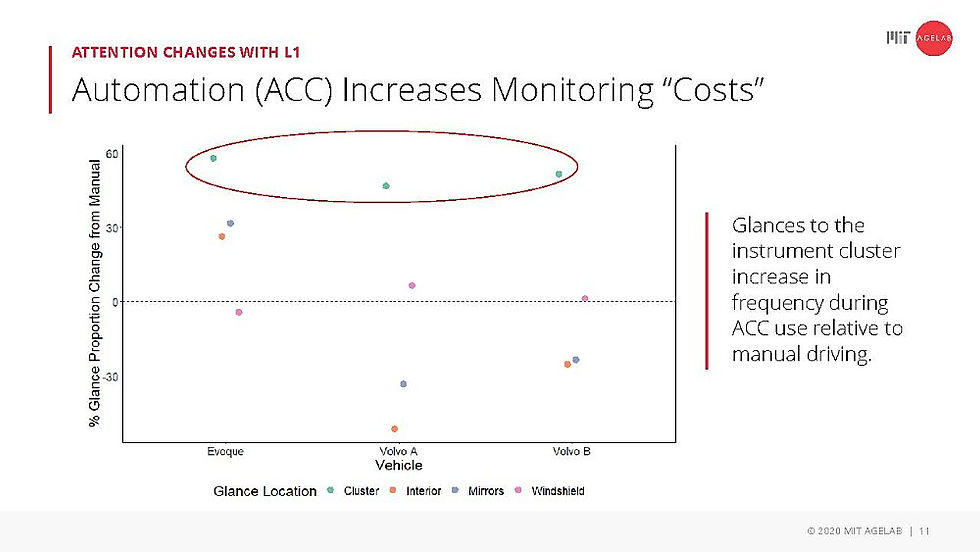

One thing we’ve also highlighted, in the context of adaptive cruise control, is that when drivers are monitoring automation, there is a cost. Even Level 1 ACC increases it. Across three different vehicle configurations here (a Land Rover Evoque we started some work with, and Volvo’s Pilot Assist in both what we’ll call version A and version B), ACC increases the monitoring cost directed to the instrument cluster by over 50%. Drivers are asking: what’s the state of the automation I need to attend to?

Looking further, when Pilot Assist is engaged, that automation monitoring cost increases by almost 200%, along with a 50% increase in glances to the interior of the vehicle. Not surprisingly, version B is really the more effective system, a topic for a later date.

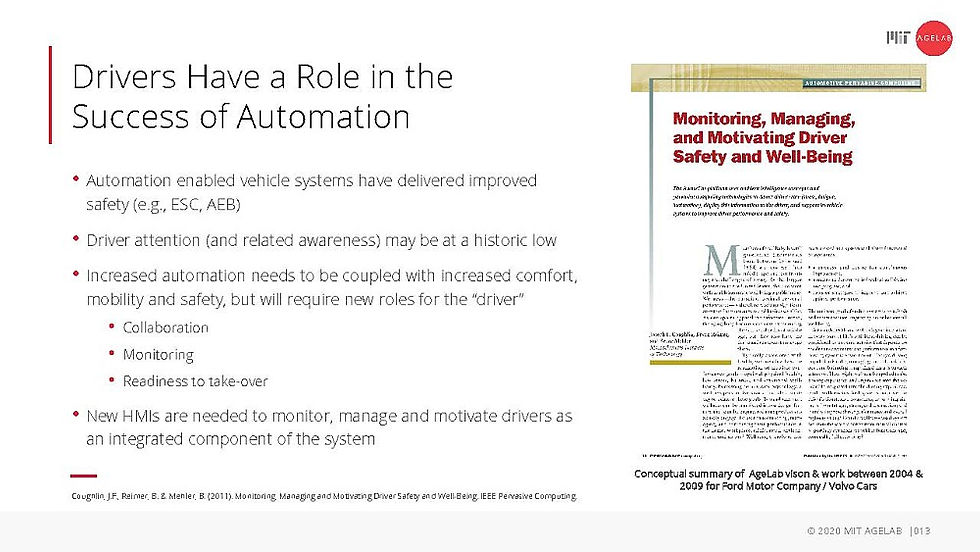

The key here is that drivers have a role in successful automation. We’ve seen this before; it’s not new. Automation-enabled systems such as Electronic Stability Control and Adaptive Driving Beam (ADB) are working to improve safety on our roads, and they’re not trying to exclude drivers from the decision process. They’re engaging and motivating them. Yes, automation is playing a safety role. But driver attention, and the related situational awareness, may be at a historic low because of the distraction in our lives, which means we are predisposed not to attend to the road when we are supported by automation. Thus increased automation needs to be coupled with increased mobility and safety. When we automate, we need to think about the driver’s new role in collaboration, in monitoring, and in being ready to take over, whether that is at L2 or L3. To do this successfully we are going to need new human-machine interfaces. I’m a strong proponent of monitoring, managing, and motivating drivers, and in some of our early work done for Ford, in the 2005 to 2009 range, we began to outline some of those contexts: the driver has an integrated role. The aviation industry discovered this among pilots in the early ’80s. Replacing pilots was not the answer; augmenting cockpit automation with pilot skill is how we have reached the level of aviation safety we have today.

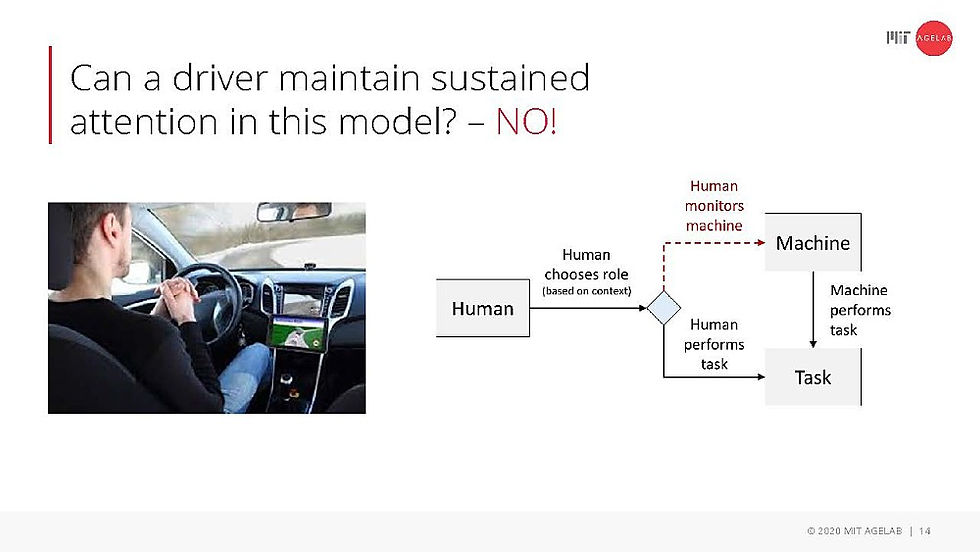

So can drivers maintain sustained attention when, at any point, they must decide whether to monitor a machine or to drive? The answer is very much no. We can’t do that, and that is one of the reasons drivers are struggling so much with the deployment of Tesla’s Autopilot.

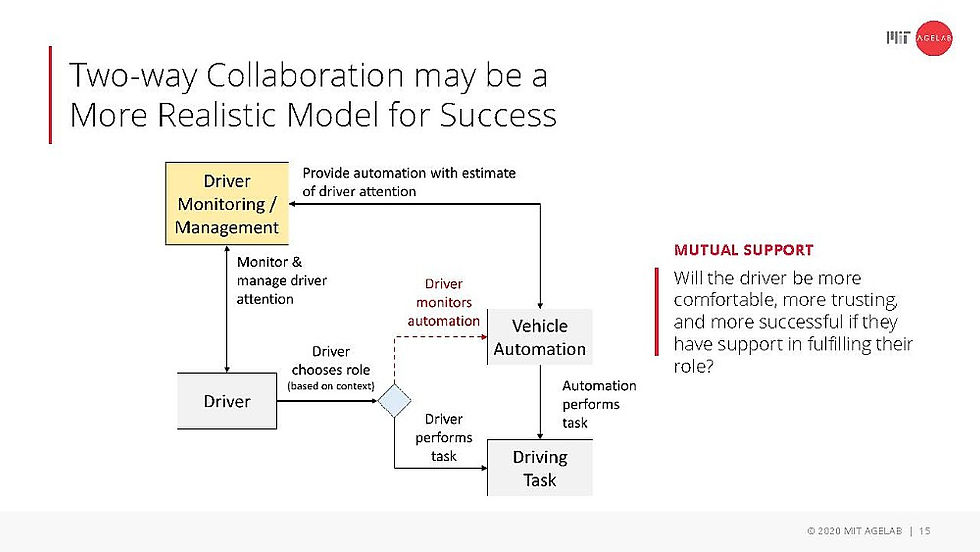

The key here is two-way collaboration: bringing driver monitoring and management into the equation, so that the system monitors and manages the driver and understands what the driver is attending to, and the vehicle automation knows the state of the driver and where they need to be supported. It’s mutual support, and that support can lead to a more comfortable, more trusting, and more successful relationship, with the driver fulfilling their role and the automation supporting them to make driving more comfortable and convenient. That is a topic we can talk about extensively later, when time allows.

So the real key here is a deeper understanding of attention management: really trying to manage driver workload at any given point in time, alerting drivers when they’re inattentive or fatigued and calming them when they’re actively distracted or overloaded. Perhaps we have been looking at the entire distraction problem all wrong. We need to look at it holistically, across the system, as an attention problem. What we classically thought of as distracted driving, picking up your cell phone while driving, is really filling the attentional void left when driving is too boring. We can help drivers fill that void strategically, in a more safety-conscious way. In essence, this means treating the driver problem as an attention management problem rather than as a driver distraction problem or a driver monitoring problem. How do we help the driver make better decisions at any given point in time, looking at the road when they need to and threading some of what they want to get done while driving in between?

Some work that we pursued as part of the MIT-AHEAD consortium over the last seven or eight years looks at this in the context of an attentional buffer, encouraging drivers to look up frequently enough to acquire the situational understanding needed to make downstream decisions. Some of this work shows that when you look at the relationship between time looking away from the road and time looking at the road, you can begin to see divergences in attention that are predictive of crashes in ways the industry has not seen before. This foundational model can be used to design interfaces much more coherently than global standards do today. In essence, it follows the concept of interruptibility and encourages drivers to look at the road long enough to acquire the information needed to make downstream decisions. We often look up for a brief second, see that the environment hasn’t changed, and look back down too quickly, failing to see changing threats in the world around us.
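
The buffer idea can be illustrated with a short simulation. This is a minimal sketch under stated assumptions, not the MIT-AHEAD model itself: the 2-second capacity, the 0.1-second latency before an on-road glance starts refilling the buffer, the one-to-one fill and drain rates, and the names `AttentionBuffer` and `simulate` are all hypothetical. It shows the point made above: brief glances back to the road refill the buffer only partly, so a driver can run it down even while looking up repeatedly.

```python
from dataclasses import dataclass, field


@dataclass
class AttentionBuffer:
    """Hypothetical attention buffer, measured in seconds of situational 'credit'."""
    capacity_s: float = 2.0  # assumed maximum buffer size
    latency_s: float = 0.1   # assumed on-road time before information is acquired
    level_s: float = 2.0     # start full, as if the driver has just looked at the road
    _on_road_run_s: float = field(default=0.0, init=False)

    def update(self, dt: float, on_road: bool) -> float:
        """Advance by dt seconds of glance data and return the new buffer level."""
        if on_road:
            self._on_road_run_s += dt
            # Only the part of this sample beyond the latency refills the buffer.
            refill = min(dt, max(0.0, self._on_road_run_s - self.latency_s))
            self.level_s = min(self.capacity_s, self.level_s + refill)
        else:
            # Looking away drains the buffer one-for-one, never below zero.
            self._on_road_run_s = 0.0
            self.level_s = max(0.0, self.level_s - dt)
        return self.level_s

    @property
    def depleted(self) -> bool:
        return self.level_s <= 0.0


def simulate(glances, dt=0.05):
    """Run a list of (on_road, duration_s) glances through a fresh buffer."""
    buffer = AttentionBuffer()
    for on_road, duration_s in glances:
        for _ in range(round(duration_s / dt)):
            buffer.update(dt, on_road)
    return buffer
```

For instance, `simulate([(False, 1.5), (True, 0.5), (False, 1.5)])` ends depleted, because the half-second glance refills only about 0.4 s of buffer, whereas replacing that glance with a 2-second look leaves roughly 0.5 s in reserve. An interface built on such a model would prompt a longer look at the road rather than simply counting glances.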

So when we look forward, advances in HMI will be critical to supporting both drivers and, over time, riders. As we automate more, information needs will increase. Drivers need to know where the system is working and why. They need to be educated so that trust builds over time. Trust erodes quickly: we need to build it and encourage it. We need to build new infotainment displays and new visual displays. The picture on the right is from Veoneer’s Learning Intelligent Vehicle, which has been demonstrated at CES in the last few years. Mode awareness and mode confusion are areas where visual information can help. We need to look at more effective multi-modal interfaces: haptics are incredibly important, as are visual indicators and high-end dashboard displays, with auditory cues only when needed. We need to innovate forward in driver state management, helping drivers make better decisions. I’d also add that we need to innovate in external human-machine interfaces (eHMI), using the outside of the vehicle to communicate expectations in potentially salient situations. Advertisement space will become critical. So it’s really the innovative creation of communication, infotainment and safety systems through synergistic HMIs that is critical to the automated, electrified and connected mobility system we are all working toward. It’s all about how you connect it to us, the user.

So in closing, the future may be autonomous, but unfortunately full autonomous mobility could be the best part of a century away, and the next few decades will be ones of collaboration. Significant changes to how we live and move will take decades to unfold. We will see higher levels of automated vehicles emerging, whether delivering groceries or picking up passengers, and the business case for how to make money here will evolve and improve. The key may be managing consumer expectations through this process. Right now drivers are looking for a riding experience that they’re not going to find in their neighbourhood anytime soon. We need to ensure that when they go to the dealer to buy their next car, their expectations are grounded, because the industry needs to continue to excite and delight customers. Well-conceived policy or industry collaboration is needed to ensure appropriate standardization across levels and platforms. I’m not sure we know what to standardize now, but we do know how to build guardrails to keep this industry moving safely and securely forward through the automated development process. Connecting the human to this is critical, and so is human-centred engineering through the design, development and deployment of these systems. It’s all about trust. We need to build a trusted, safe mobility system. We can’t walk out onto our roads worried about what’s going to happen, the way the global pandemic around us has us worried today. Safety and security need to be key. So I strongly believe that collaborative driving deployments can become a saviour to the automotive industry, at least for the foreseeable future and into the next several decades.

There’s little doubt that robotaxis will be driving around a century from now, but let’s look at collaborative systems, as Super Cruise is illustrating we can do in GM’s context and as many others are following, to move drivers from the traditional context of holding the wheel to the new world of collaboration. And I think it’s important, as I highlighted in the Forbes piece I posted back in January, that we take the initiative, with Tesla leading the way, to better monitor and manage driver behaviour, and I called for the industry to work together to develop deployment standards there. With that, I will close, and I look forward to the question session later on.

The transcripts for the other three parts of this Online Conference are available as follows:

Christophe Aufrère, CTO of Faurecia: FISITA Automotive HMI Online Conference Transcript: Introduction and Q&A

Philipp Kemmler-Erdmannsdorffer, NIO: Envisioning Future Mobility: Redefining User Experience

Omar Ben Abdelaziz, Faurecia: Cockpit for the Future

Originally broadcast on Wednesday 25 March 2020 at 14:00 GMT, the FISITA Automotive HMI Online Conference explored the interactions between human and machine in an automated car; the technology to sense, measure, control and close the loop between the human and the car; and the human-machine interfaces that have already been implemented, such as voice control, as well as some others yet to be realised.

There were 272 registrations for the conference, with 234 joining the session for some of the time and all of the others watching the replay.

In addition to the 28 who didn’t attend but watched the replay, 45 others who did attend also watched the replay. Of those who joined the call, 133 were there for over 50 minutes and 168 for over 30 minutes.

The webinar attracted attendees from 23 countries with more than 10 attendees from China, Finland, France, Germany, India, the UK, and the US. There were people from over six major OEMs, many Tier Ones and a number of research institutes.

WATCH THE FULL REPLAY: register free of charge for FIEC to access the HMI replay.

Comments